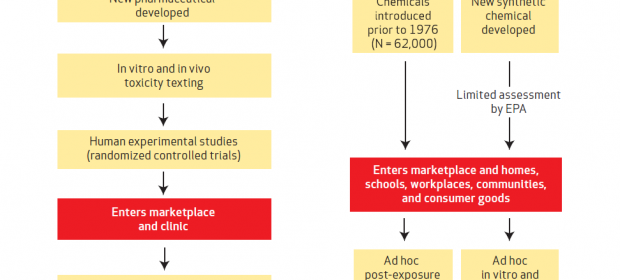

Differences between the streams of evidence used in making medical interventions vs. decisions in chemicals policy.

Yesterday I participated in a very interesting webinar presenting preliminary findings of a forthcoming case study that applies the Navigation Guide methodology to evidence that PFCs may be reproductive toxins.

For those not already aware, the Guide is a US EPA- and University of California San Francisco-led project looking at how methods used for evaluating study quality and conducting systematic review in medicine can be applied to evidence that environmental pollutants may harm health. They have a published paper you can read here, though the really juicy stuff is in the appendix. (Annoyingly, both used to be freely available, though that seems not to be the case any more.)

The data will not, of course, be public until the study is published, but some themes in yesterday’s discussion are worth thinking about ahead of time.

Blinding, allocation and expectation bias

In medical trials, it is essential that the people conducting the research have no role in deciding which trial participants get treatment and which get placebo. A system involving very modern random number generators and very old-fashioned slips of paper in sealed envelopes is used to make sure the researchers have no idea whether a participant is assigned to a treatment or control group.
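To make the idea concrete, here is a minimal sketch of random allocation in Python. It is only an illustration, not the procedure any particular trial uses: the function name `make_allocation`, the arm labels and the seed are all my own assumptions. The dictionary it returns plays the role of the sealed envelopes, and would be held by someone other than the researchers assessing outcomes.

```python
import random


def make_allocation(participant_ids, arms=("treatment", "control"), seed=None):
    """Randomly assign each participant to an arm, keeping arm sizes balanced.

    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    # Build one slot per participant, cycling through the arms so that
    # group sizes differ by at most one, then shuffle the slots.
    slots = [arms[i % len(arms)] for i in range(len(ids))]
    rng.shuffle(slots)
    # The "sealed envelopes": participant ID -> assigned arm.
    return dict(zip(ids, slots))


# Example: 20 participants, split evenly between the two arms.
allocation = make_allocation(range(1, 21), seed=2024)
```

The point of the design is that the assignment depends only on the random shuffle, never on anything the researcher knows or believes about the participant.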

Researchers also have to be blinded (not literally!), meaning they don’t know if the animal they are observing has been subjected to treatment or is in the control group.

This is to address expectation bias: the propensity of people to find what they expect to find. The direction of the bias is almost always towards the hypothesis being tested (it would be pretty weird to be biased towards something you weren’t expecting to find). The degree to which expectation bias exerts an effect can be quantified by comparing the findings of blinded studies with unblinded ones.
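As a rough sketch of what that comparison could look like, the snippet below takes a set of studies, splits them by whether observers were blinded, and reports the difference in average effect size. The numbers are invented purely for illustration and come from no real study, the function name `blinding_gap` is hypothetical, and a real analysis would weight studies by their precision rather than take a simple mean.

```python
from statistics import mean

# Hypothetical effect sizes, invented purely for illustration.
studies = [
    {"effect": 0.2, "blinded": True},
    {"effect": 0.4, "blinded": True},
    {"effect": 0.5, "blinded": False},
    {"effect": 0.7, "blinded": False},
]


def blinding_gap(studies):
    """Return mean effect in unblinded studies minus mean effect in blinded ones."""
    blinded = [s["effect"] for s in studies if s["blinded"]]
    unblinded = [s["effect"] for s in studies if not s["blinded"]]
    if not blinded or not unblinded:
        raise ValueError("need at least one blinded and one unblinded study")
    return mean(unblinded) - mean(blinded)


gap = blinding_gap(studies)
```

A positive gap would be consistent with unblinded studies reporting larger effects, which is the pattern expectation bias would produce, though other differences between the two groups of studies could also explain it.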

What was particularly interesting in the PFC case study was that, of the group of studies suitable for meta-analysis, none employed random allocation and only one employed blinding.

The situation is far from unique to animal studies used in chemical toxicology. There is evidence that only 28% of papers describing drug tests using animals report random allocation to treatment groups and only 2% report that observers were blinded to treatment (Landis et al. 2012).

Responding to expectation bias

Expectation bias really needs to be eliminated. Blinding might not be standard practice (someone on the call said researchers like to know what is happening to their animals while it is happening) but, as someone else pointed out, there is no reason in the world to assume that a PhD student examining animals in a lab is going to be any less influenced by expectation bias than a PhD student in a hospital examining that other kind of animal, a human being.

Does this mean all the data in these studies is rubbish? No: it means it is flawed. Because even flawed studies can have value, what you have to do is weight them accordingly and make it absolutely crystal clear to your reader what weight you give them and why. That is the purpose of the Navigation Guide: to recognise limitations in data, but not to throw the baby out with the bathwater.